What is neural program synthesis?

12.04.2018

In recent years, deep learning (DL) has made considerable progress in areas such as online advertising, speech recognition and image recognition. The success of DL lets us rethink how software itself is created. We can use neural nets to gradually increase automation in program creation and help engineers get more results with less effort.

Program synthesis has many applications. Successful systems could one day automate a job that currently seems very secure for humans: computer programming. Imagine a world in which debugging, refactoring, translating and synthesizing code from sketches can all be done without human effort.

What is Thousand Monkeys Typewriter?

Thousand Monkeys Typewriter (TMT) is a program induction system that generates simple scripts in a domain-specific language (DSL). The system combines supervised and unsupervised learning. Its core is the Neural Programmer-Interpreter (NPI), which is capable of abstraction and higher-order control over the program. The system is used for error detection in both user logs and software source code.

TMT also incorporates the most common concepts used today in the field of program synthesis: satisfiability modulo theories (SMT) and counter-example-guided inductive synthesis (CEGIS).
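To make the CEGIS idea concrete, here is a minimal sketch in Python. It is not TMT's implementation: the toy task (finding integer coefficients `a` and `b` so that `a * x + b` matches a hidden specification), the function names `synthesize`, `verify` and `cegis`, and the search ranges are all assumptions made for illustration. A real system would typically delegate both steps to an SMT solver; brute-force enumeration stands in for it here.

```python
from itertools import product
from typing import Callable, List, Optional, Tuple

Candidate = Tuple[int, int]  # (a, b) for the candidate program f(x) = a * x + b


def synthesize(examples: List[Tuple[int, int]]) -> Optional[Candidate]:
    """Inductive step: return the first candidate consistent with all examples."""
    for a, b in product(range(-10, 11), repeat=2):
        if all(a * x + b == y for x, y in examples):
            return (a, b)
    return None  # no candidate in the (hypothetical) search space fits


def verify(candidate: Candidate, spec: Callable[[int], int]) -> Optional[int]:
    """Verification step: return an input where the candidate is wrong, or None."""
    a, b = candidate
    for x in range(-50, 51):
        if a * x + b != spec(x):
            return x
    return None


def cegis(spec: Callable[[int], int], max_rounds: int = 20) -> Optional[Candidate]:
    """Alternate synthesis and verification, growing the example set."""
    examples: List[Tuple[int, int]] = []
    for _ in range(max_rounds):
        candidate = synthesize(examples)
        if candidate is None:
            return None
        counterexample = verify(candidate, spec)
        if counterexample is None:
            return candidate  # verified on the whole bounded domain
        # The counterexample becomes a new constraint for the next round.
        examples.append((counterexample, spec(counterexample)))
    return None


result = cegis(lambda x: 3 * x - 4)
print(result)
```

Each counterexample shrinks the set of candidates that the synthesizer may return, so the loop usually needs far fewer examples than an exhaustive check of the specification would.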

Types of data

There are two types of data (logs) that we are analyzing:

- user logs

- program traces

Supervised and unsupervised

To analyze logs, we use both an unsupervised technique (Donut for user logs) and a supervised one (engineers mark anomalies in software traces using JUnit tests).

NPI

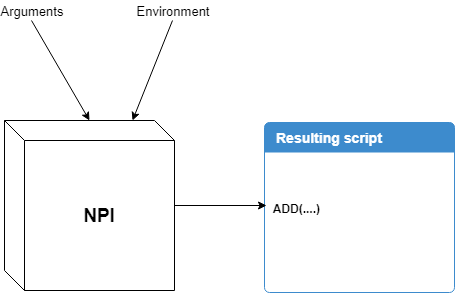

NPI is the core of the system. It takes logs and traces and learns the probability of each next program call for every timestep and environment.

The Neural Programmer-Interpreter (NPI) consists of:

- An RNN controller that takes sequential state encodings built from (a) the world environment (which changes with actions), (b) the program call (the action) and (c) the arguments for the called program. The entire input is fed in at the first timestep; after that, every action taken by the NPI produces an output that is fed back as the next input.

- DSL functions

- The domain itself, where the functions are executed (the “scratchpad”)
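The interplay of the three parts listed above can be sketched as a small loop. This is only an illustration under stated assumptions: `stub_controller` is a hand-written stand-in for the trained RNN (it has no hidden state and learns nothing), and the DSL functions `READ_NEXT` and `CHECK`, the scratchpad fields and the limit of 100 are hypothetical, not part of TMT.

```python
from typing import Callable, Dict, List, Tuple

Env = Dict[str, object]   # the scratchpad: the domain the functions act on
Args = Dict[str, int]


# Hypothetical DSL functions: each one changes the scratchpad in place.
def read_next(env: Env, args: Args) -> None:
    env["pos"] += 1


def check(env: Env, args: Args) -> None:
    if env["values"][env["pos"]] > args["limit"]:
        env["flagged"].append(env["pos"])


DSL: Dict[str, Callable[[Env, Args], None]] = {"READ_NEXT": read_next, "CHECK": check}


def stub_controller(program: str, args: Args, env: Env) -> Tuple[str, Args, bool]:
    """Stand-in for the RNN: maps (environment, program, args) to the next call.

    Returns (next_program, next_args, terminate).
    """
    if program == "CHECK":
        at_end = env["pos"] == len(env["values"]) - 1
        return "READ_NEXT", {}, at_end
    return "CHECK", {"limit": 100}, False


def run(env: Env, max_steps: int = 50) -> List[str]:
    """Execute calls in the scratchpad and feed each result back to the controller."""
    program, args = "CHECK", {"limit": 100}
    trace: List[str] = []
    for _ in range(max_steps):
        DSL[program](env, args)          # the action changes the environment
        trace.append(program)
        program, args, terminate = stub_controller(program, args, env)
        if terminate:
            break
    return trace


env: Env = {"values": [10, 250, 30], "pos": 0, "flagged": []}
trace = run(env)
print(trace, env["flagged"])
```

In a trained NPI the decision made in `stub_controller` would come from the network's output probabilities rather than from fixed rules.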

At the moment, TMT generates simple scripts for anomaly detection in production logs.

How the generator works

Data

At the moment, we analyze three types of logs: user logs, database logs, software traces.

Detect anomalies

Next, we try to detect any problems the logs contain. What exactly is an anomaly? Simply put, an anomaly is any deviation from standard behavior.

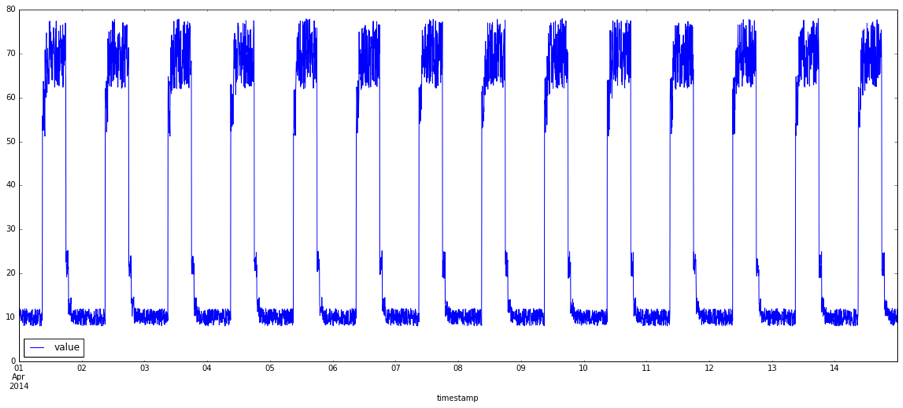

Normal data representation:

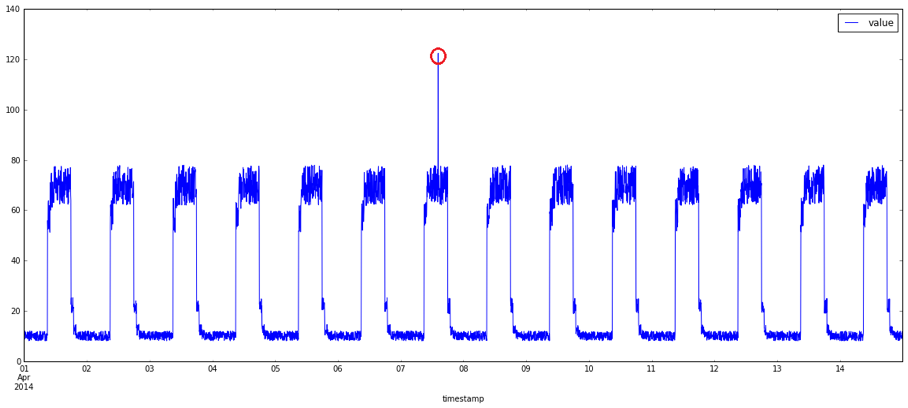

Point anomalies, which are anomalies in a single value in the data:

Query execution time anomalies:

We aim to detect anomalies in situations such as memory leaks, bottlenecks in the Java runtime and server infrastructure problems.
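As an illustration of what a point anomaly in query execution time looks like in code, here is a small sketch. It is deliberately not Donut: it swaps in a much simpler robust statistic (the modified z-score based on the median absolute deviation). The function name `point_anomalies`, the sample timings in `query_ms` and the cutoff of 3.5 are assumptions chosen for the example.

```python
from statistics import median
from typing import List, Sequence


def point_anomalies(values: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return indices of values that deviate strongly from the median."""
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # all values (nearly) identical: nothing to flag
    # 0.6745 rescales the MAD so the score is comparable to a z-score
    # when the data are roughly normally distributed.
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical query execution times in milliseconds.
query_ms = [12.0, 11.5, 13.1, 12.4, 250.0, 12.2, 11.9]
anomalies = point_anomalies(query_ms)
print(anomalies)
```

A median-based score is used instead of mean and standard deviation because a single extreme value would otherwise inflate the very statistics it is compared against.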

As a result, we acquire training data, labeled either manually (supervised) or by an automatic classifier (unsupervised).

Train Neural Programmer

Once we have a list of events labeled as normal or abnormal, we train our core to distinguish normal from abnormal events in the future.

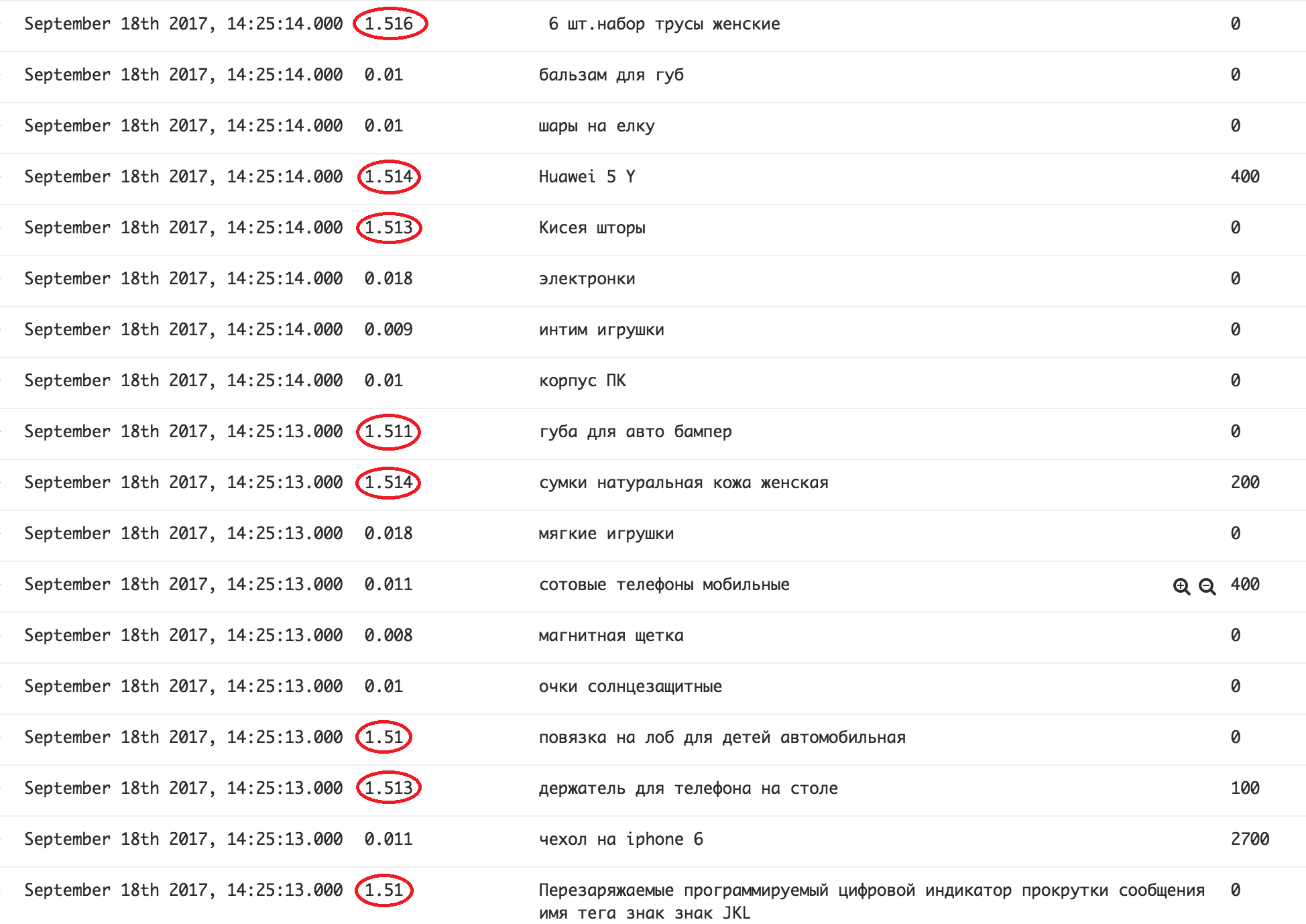

In the case of unsupervised learning, the process can be described as “one neural net teaching another”:

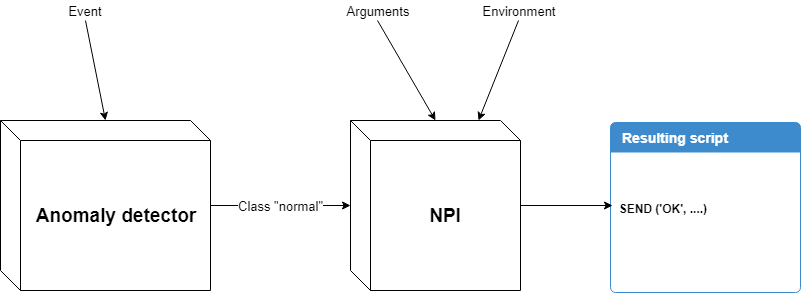

Event in log was labeled as normal:

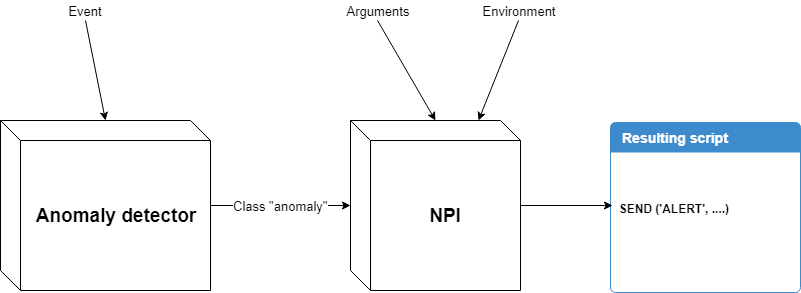

Event in log was labeled as abnormal:

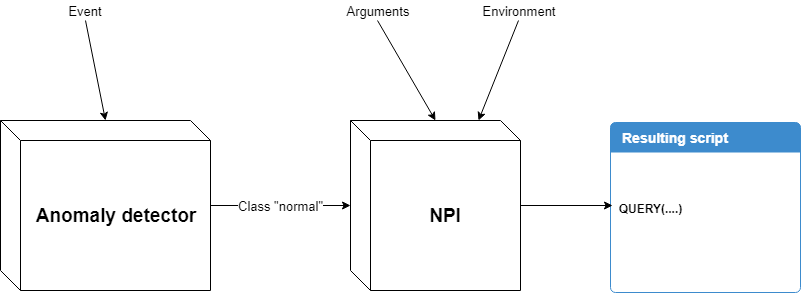

DB query was labeled as normal:

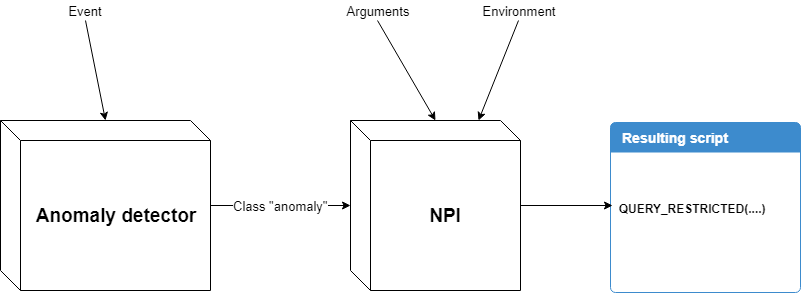

DB query was labeled as abnormal:

In some cases, where situations are labeled as normal by default, we only have to decide which command to call next.

Working with the scripts at runtime

Having a trained NPI means that, at each step, we have an operation predicted from the arguments and the environment. We therefore expect a well-trained model to predict each command at each step, indicating whether the situation observed in the logs (software traces) is normal or not. If it is normal, we expect one outcome; if not, another.

In other words, the model predicts an outcome from a given state: label (by default, “normal”), argument and environment. Each combination of these parameters can produce a different outcome.

Sample normal runtime script with environment:

BEGIN

DIFF

DIFF

CHECK

MO_ALARM

Alert runtime script:

BEGIN

DIFF

DIFF

CHECK

ALARM

Data environment

DIFF ({'program': {'program': 'diff', 'id': 6}, 'environment': {'date1': 15, 'output': 0, 'answer': 2, 'terminate': False, 'client_id': 2, 'date2': 20, 'date2_diff': 45, 'date1_diff': 93}, 'args': {'id': 29}})
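A record like the one above can be read back into a structure for inspection. The sketch below assumes the trace line is a command name followed by a Python literal in parentheses; that format is inferred from this single example, and `parse_step` is a hypothetical helper, not part of TMT.

```python
import ast
from typing import Tuple


def parse_step(line: str) -> Tuple[str, dict]:
    """Split 'NAME ({...})' into the command name and its state dictionary."""
    name, paren, rest = line.partition("(")
    # "(" + "{...})" is a parenthesized dict literal, which literal_eval
    # evaluates safely without executing arbitrary code.
    state = ast.literal_eval(paren + rest.strip())
    return name.strip(), state


line = (
    "DIFF ({'program': {'program': 'diff', 'id': 6}, "
    "'environment': {'date1': 15, 'output': 0, 'answer': 2, 'terminate': False, "
    "'client_id': 2, 'date2': 20, 'date2_diff': 45, 'date1_diff': 93}, "
    "'args': {'id': 29}})"
)
command, state = parse_step(line)
print(command, state["program"]["id"], state["environment"]["terminate"])
```

The three top-level keys match the controller inputs described earlier: the program call, the environment and the arguments.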

Challenges

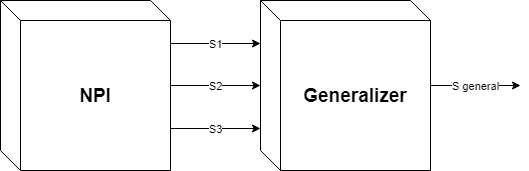

One of the problems with NPIs is that we can only measure generalization by running the trained NPI on various environments and observing the results. And, as explained earlier, every change of the parameters can produce a new script.

For the sake of simplicity, we want to create one script that covers many (ideally all) situations.

This means that we still have to build a module that merges all possible scripts from a particular NPI into the smallest possible number of scripts, preferably one.
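One possible starting point for such a merge, offered purely as a sketch and not as the planned module, is a prefix tree: scripts that begin with the same commands share those steps and branch only where they diverge. The names `merge_scripts` and `render` and the `? ` branch marker are hypothetical; the two input scripts are the sample scripts shown earlier, with command names spelled as they appear there.

```python
from typing import Dict, List

Tree = Dict[str, "Tree"]


def merge_scripts(scripts: List[List[str]]) -> Tree:
    """Merge linear scripts into a nested prefix tree {command: subtree}."""
    tree: Tree = {}
    for script in scripts:
        node = tree
        for command in script:
            node = node.setdefault(command, {})
    return tree


def render(tree: Tree, depth: int = 0) -> List[str]:
    """Flatten the tree into lines; alternatives at a branch are marked with '? '."""
    lines: List[str] = []
    branching = len(tree) > 1
    for command, subtree in tree.items():
        lines.append("  " * depth + ("? " if branching else "") + command)
        lines.extend(render(subtree, depth + 1 if branching else depth))
    return lines


normal = ["BEGIN", "DIFF", "DIFF", "CHECK", "MO_ALARM"]  # as in the sample script
alert = ["BEGIN", "DIFF", "DIFF", "CHECK", "ALARM"]
merged = render(merge_scripts([normal, alert]))
print("\n".join(merged))
```

This only shares common prefixes, so it does not guarantee the smallest possible script in general; scripts that differ early but converge later would still be duplicated.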

Examples

References

Deep Learning: A Critical Appraisal

Neuro-Symbolic Program Synthesis

A curated list of awesome machine learning frameworks and algorithms that work on top of source code

Neural programmer concepts

Program Synthesis with Reinforcement Learning (Google)

Bayou (https://github.com/capergroup/bayou)

Anomaly detection

Unsupervised Anomaly Detection via Variational Auto-Encoder for Seasonal KPIs in Web Applications

Anomaly Detection for Industrial Big Data

Faster Anomaly Detection via Matrix Sketching